The Economics of Chiplets

- kari-no-sugata

- Jun 24, 2022

By: kari-no-sugata

Editing by: KarbinCry & Tom

Introduction

In the past, it was common for PCs to have a separate chip called a northbridge on the motherboard. The northbridge had a direct connection to the processor and handled the main memory controller and primary I/O functions. To help improve performance, processor designers integrated the main memory controller and the primary I/O controller onto the processor and the northbridge disappeared from the motherboard. Much like the x87 floating-point coprocessor, which was eventually integrated into the processor, this is part of a long-running trend to integrate more and more onto the main processor.

The I/O chiplet on current Zen processors is effectively a northbridge, except that it is integrated into the processor package rather than the motherboard. So why did AMD seemingly go backwards?

The origins of chiplets

A simple way to improve the performance of a system with multiple chips is to integrate those chips into fewer, larger chips. This is the basic underlying reason for the long term trend towards integrating more features onto chips and is due to communication within a monolithic chip being far superior in terms of latency, bandwidth, area and power consumption. This is most common for the main processor in a system and multi-core processors are a good example of this. So it’s no surprise that chips for high end systems got larger and larger until they hit the reticle limit, which is currently 26mm x 33mm or 858mm².

There are various ways around the reticle limit but the two main solutions for general computing are to use multiple copies of the same large processor in separate modules (or packages) on a shared motherboard or use multiple copies of the same large processor together in a single module - a multi-chip module (MCM), also called a multi-chip package. An MCM allows for tighter integration and improved performance compared to different packages on the same motherboard, though it is still inferior to on-chip communication.

Manufacturing large chips is expensive and packaging them into an MCM is also expensive and has generally been the preserve of high end systems. But there is much more to computing than high end systems and in the PC market there is demand for products across a wide range of performance levels. When considering a whole product range, if you’re going to need to use an MCM design for the high end product anyway, whether due to high manufacturing cost or hitting the reticle limit, why not create a scalable design for a wide range of products using the same chips instead? This is what AMD did with their first generation Zen processors, using between one and four of their 8 core Zeppelin chips for a maximum of 32 cores in a single package, for both desktop and server markets. AMD could have made monolithic equivalents of all these products instead but reusing the same chip across a wide range of products improves the economics in various ways - AMD claimed that a monolithic 32 core design would have been 70% more expensive to manufacture. You could say that rather than trying to make a “perfect” design for each individual product in the range, AMD instead chose to make a “good enough” but flexible design for a range of products.

For their second generation Zen products, AMD were also transitioning their primary manufacturing to TSMC’s 7nm class process. Early in their planning they realized that while shrinking the Zeppelin design to 7nm would bring performance advantages it would actually be more expensive to manufacture. In AMD’s analysis (from an ISCA 2021 paper), about 56% of the design would shrink by half (the cores and cache) but the rest would not shrink, resulting in an overall die shrink of only 28%. Assuming that reports on the increase in wafer costs are correct, the cost per chip would actually increase on the new node. It should also be noted that wafer costs are not fixed in stone and normally decrease with time, particularly early on.
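The arithmetic behind that 28% figure, and what it implies for wafer pricing, can be sketched in a few lines. This is only a rough illustration: the function names are my own, and cost per die is assumed to be proportional to die area times wafer cost, which ignores yield and wafer-edge effects.

```python
def overall_area_ratio(scaling_fraction: float, shrink_factor: float) -> float:
    """Area of the shrunk die relative to the original die.

    scaling_fraction: share of the die that benefits from the new node (0..1)
    shrink_factor:    new area / old area for that share (0.5 = shrinks by half)
    """
    return scaling_fraction * shrink_factor + (1.0 - scaling_fraction)


def break_even_wafer_cost_ratio(area_ratio: float) -> float:
    """Largest wafer cost ratio (new/old) at which cost per die does not rise.

    Simplifying assumption: cost per die ~ die area x wafer cost.
    """
    return 1.0 / area_ratio


# Figures quoted above: 56% of the design shrinks by half, the rest not at all.
area_ratio = overall_area_ratio(0.56, 0.5)
print(f"overall shrink: {1 - area_ratio:.0%}")  # 28%
print(f"break-even wafer cost increase: {break_even_wafer_cost_ratio(area_ratio) - 1:.0%}")  # 39%
```

Under these simplifications, any wafer price increase beyond roughly 39% would make the shrunk chip more expensive per die than the original.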

At the 5nm node SRAM (for caches) scales far worse than logic, with analog circuits (e.g. I/O blocks) barely improving at all, while wafer costs are expected to increase by about 80%. Even logic heavy APUs like those used in the PlayStation 5 or Xbox Series X would only shrink by about a third, making them more expensive to manufacture on 5nm. Only a chip that is about 90% or more compute logic would definitely be cheaper. At the 3nm node the improvement in density for cache and I/O is expected to be smaller than at 5nm, with some reports giving even more pessimistic figures. There’s more uncertainty about TSMC’s 3nm wafer costs but they look to be at least 30% more than 5nm, with some recent reports showing 50%, meaning that the cost increase is expected to be higher than the typical density increase, possibly considerably higher. This scaling problem has already been affecting DRAM for many years, which is why density improvements have slowed.

Based on TSMC’s official figures, a 100mm² pure logic chip on TSMC’s standard 7nm process would shrink to 32mm² on TSMC’s standard 3nm process, but for cache and I/O the numbers would be 59mm² and 81mm² respectively. The logic chip would have better performance and also be slightly cheaper to make but the cache and I/O chips would show little difference in performance and be more expensive as well. In short, only logic is profitable to shrink to more advanced nodes - the only reason for chips on 5nm and lower nodes to include cache or external I/O (off-package I/O) is because they have to despite the costs. Which in turn raises the question, is there a better way?

For their second generation EPYC processors, AMD decided to shift from a homogeneous MCM design to a heterogeneous MCM design using a common x86 chip shared with their desktop processors and a separate I/O chip using an older cheaper node. In short, they specialized the chips by the relative production costs - almost everything that wouldn’t benefit from 7nm was kept on an older cheaper node and put on a single chip. This style of mixing different chip designs and different process nodes in a single package came to be known as chiplets, though there isn’t a strict definition of the term. This makes economic sense but also rather neatly reversed the trend to integrating more and more features onto the main chip. This split also makes logical sense as the memory and I/O subsystems had never been integrated into the CPU cores themselves so it makes for a clean dividing line. Considering that the original reason for this integration was higher performance, this split naturally came at the cost of performance and also higher power consumption, though somewhat mitigated by using on-package interconnects. But not everything that originally came on separate chips can be easily separated again and it’s not like reversing previous trends is the goal - the goal is to make better products with the available tools (more on that later).

Chiplets are best thought of as an alternative design methodology to monolithic chips in a world where Moore’s Law has largely stopped being an economic benefit. From a technology perspective, chiplets are a subset of MCMs but the overall design intent is different. This is a bit like how turbos for cars were originally introduced to improve performance but later on the same concept was used to try to help improve fuel economy. A single chiplet design would typically have a single function, be operationally dependent on other chiplets, be reusable across a range of products and product generations and be individually small. While not every chiplet will have all those traits, the common goal is the economic benefits and this can even be at the expense of performance, though the lower manufacturing costs of chiplets also allow for higher end designs for a given price point. The best case from an economics perspective is when a small set of chiplets in various combinations can replace multiple monolithic designs.

There is a lot of excitement about chiplets in the industry but don’t expect an overnight shift - it’s not like AMD themselves quickly transitioned to chiplets across all their product lines. In the general sense, chiplets give designers a different way to approach the same problem - how to trade off various factors for costs, power consumption, size, risks and performance to come up with the best range of products. If we view chiplets as a consequence of Moore’s Law dying at different rates for logic, cache and I/O then the natural expectation would be for those functions to start to separate out into different chiplets over time.

While the underlying factors behind chiplets are relatively simple, there are a lot of different ways to implement them, each with their own tradeoffs, as well as many other factors that affect different products and different companies in different ways. These will be topics for the rest of this article.

Chiplet tradeoffs

Using chiplets can be thought of as a more economical alternative to monolithic designs and also to homogeneous MCMs, so the goal is to maximize the benefits while minimizing the costs. This has to be done from early on in the design phase and requires a different approach to conventional monolithic designs. One of the fundamental downsides is the need for additional on-package interconnects - I/O circuits to allow the chiplets to communicate with each other. As a rough rule of thumb, these extra circuits can increase the amount of silicon required by 10% over a monolithic design. There are many different ways to implement on-package interconnects and they have all sorts of different trade-offs. I would have preferred to include details on the real world costs of the various options but unfortunately this information is not publicly available.

The simplest category of interconnects is generally referred to as “2D” as the chips are simply arranged in a flexible 2D plane, with the most well known example being AMD’s Zen 2 and Zen 3 desktop processors and their EPYC counterparts. The distances between chiplets typically range from a few millimeters to a few dozen millimeters, making them the most flexible option. They’re also the cheapest option since they build on top of existing high volume low cost packaging technologies - for AMD’s Ryzen processors, the chiplet versions need to use a slightly more advanced and expensive grade of packaging than the monolithic ones but for AMD’s EPYC processors they were able to use the same grade of packaging as they would have for a monolithic version. 2D interconnects typically use small but power efficient serial connections, such as AMD’s Infinity Fabric, which only takes up a few square millimeters. 2D interconnects are also the slowest and are part of the reason why AMD’s chiplet based processors have worse memory latency than monolithic designs.

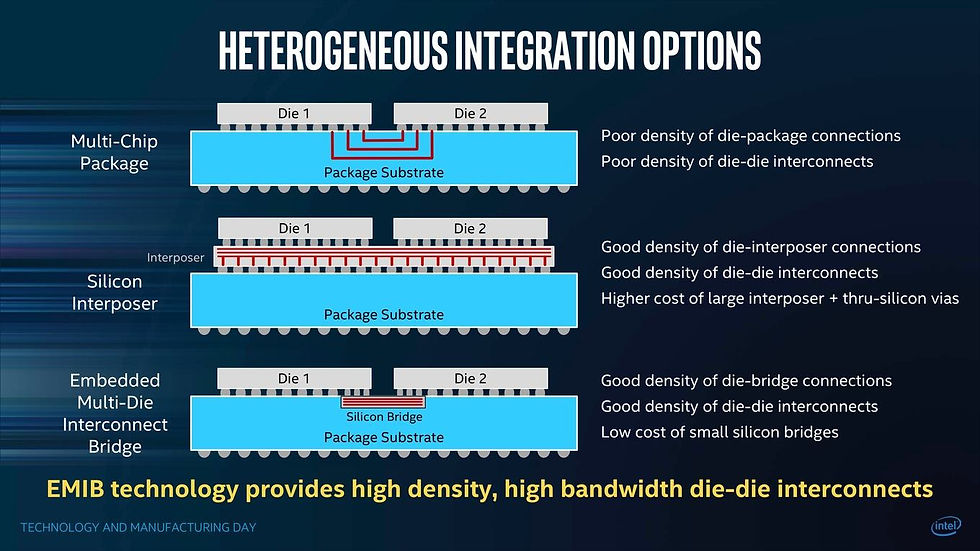

2.5D interconnects use a second piece of silicon in a plane below the two chips being connected, either using a large silicon interposer as a base or a silicon bridge only covering the interfaces between chips, with the chips having to be immediately adjacent with a gap of no more than a few millimeters between them. 2.5D interconnects use densely packed parallel interfaces, giving them significant performance advantages over 2D but at the cost of taking up more die area and requiring more advanced packaging - for example, an HBM stack has a 1024-bit data interface. Intel’s EMIB is also 2.5D and will be used by their Sapphire Rapids server processors but the interconnects take up about 80mm² per chip.

If 2D interconnects are a step above motherboard interconnects then 3D interconnects can be a step below on-chip interconnects. AMD’s recently introduced V-cache has almost the same performance as the on-chip L3 cache and this gap is expected to close further in the future. This is the first known commercial application of hybrid copper bonding in mainstream compute chips and in particular uses TSMC’s SoIC-CoW stacking technology. This places the chips directly on top of each other, using thousands of vertical wires to connect the two chips for both power and communication. The latency, bandwidth and power efficiency of this method far exceed those of TSMC's other offerings but the area overhead is harder to estimate as the connections would be spread all over the lower chip - this is one reason why the Zen 3’s L3 cache is not as dense as it could have been if it did not have 3D stacking support.

However, not all 3D stacking technologies are equal - while HBM uses 3D stacks of DRAM chips, the actual interconnects between the DRAM chips are similar to 2.5D in terms of performance and density. It’s important to emphasize that the hybrid bonding technique used by TSMC’s SoIC-CoW process is still in the very early stages of commercialization. While TSMC have demonstrated CPUs stacked on top of cache, at this point it is not clear when such configurations could go on sale for high performance applications, as cooling stacked chips and 3D power delivery are difficult challenges. Perhaps something derived from their system-on-wafer supercomputer solutions could be an option.

As well as choosing the right technology to implement the interconnects, it’s also important to optimize the topology of those interconnects. AMD’s server I/O chiplet is very large but centralizing the main switch into a single chip is also highly efficient. While splitting it into two equal chips would be more scalable it would also require a very high bandwidth connection between the two halves. Splitting it into four equal chips with direct connections to each would require 6 interconnects, like with the first generation EPYC processors. That would not only add significantly to power consumption and latency, it would also make memory latency less uniform, which can hurt performance unless NUMA optimizations are both expected and effective. That’s certainly the case for many HPC applications but for general purpose computing, a single centralized I/O chiplet is probably the best choice and since it could be made on an older process node, the costs of such a design would be reduced significantly.
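The count of 6 interconnects for four directly connected chips follows from simple combinatorics, and the sketch below compares it with a hub-style layout built around a single central I/O chiplet. The function names are my own, and counting links says nothing about the bandwidth, power or latency of each individual link.

```python
def full_mesh_links(n_chips: int) -> int:
    """Links needed when every chip connects directly to every other chip."""
    return n_chips * (n_chips - 1) // 2


def hub_links(n_compute_chiplets: int) -> int:
    """Links needed when every compute chiplet connects only to one central hub."""
    return n_compute_chiplets


for n in (2, 4, 8):
    print(f"{n} chips: full mesh = {full_mesh_links(n)} links, hub = {hub_links(n)} links")
```

The mesh grows quadratically with the number of chips while the hub grows linearly, which is one way to see why a central I/O chiplet scales more gracefully to many compute chiplets.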

One of the more subtle potential benefits of chiplets is reusing an existing design, both across a product line but also between generations. The Zen x86 chiplet is a good example of the former while the Zen I/O chiplets are an example of the latter as the Zen 2 versions were used again for the Zen 3 generation, though with some minor tweaks. It would not surprise me if AMD reuse the Zen 4 I/O chiplets with Zen 5 as this saves on R&D and also makes manufacturing simpler and more efficient by having fewer parts to produce, which also simplifies demand forecasting. The chiplet approach has likely saved AMD several hundred million dollars a year in R&D.

The downside of shared designs though is that the lower end products can have unused circuits - AMD’s consumer I/O chiplet supports up to two x86 chiplets but most would ship with just one x86 chiplet. Some markets, such as FPGAs, have taken the approach of having a large central compute unit surrounded by a variety of I/O interfaces. Amazon has also taken this approach for their Graviton ARM server chips. While that can help eliminate unused parts of a design it would still require multiple versions of the central compute unit chiplet if there’s a need for a wide range of performance and price levels.

A major benefit of chiplets over monolithic designs is that smaller chips are significantly more efficient to manufacture. A simple way to demonstrate this is with a die yield calculator - with a 300mm wafer on default settings, a 20mm x 20mm chip yields 93 good dies while a 10mm x 10mm chip yields 523 good dies per wafer - a 4x decrease in area results in 5.6x more good dies. However, such a simple calculation overstates the benefits as using 4 small chips instead of one monolithic design would require additional interconnects between the chips - if the 4 smaller chips with the extra interconnects are 10% larger then that yields 476 good dies per wafer. That’s still an improvement over monolithic but shows the importance of optimizing the design to minimize the need for large interconnects.
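For readers who want to experiment with the effect themselves, here is a minimal sketch of a die yield estimate. It uses a common textbook approximation for dies per wafer and a simple Poisson yield model with a defect density of 0.1 per cm², both of which are my own assumptions. The calculator quoted above presumably models extra details such as scribe lines and edge exclusion, so the absolute counts here will not match its output, only the general trend.

```python
import math


def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> float:
    """Textbook approximation: wafer area / die area, minus an edge-loss term."""
    d = wafer_diameter_mm
    return (math.pi * (d / 2) ** 2 / die_area_mm2
            - math.pi * d / math.sqrt(2 * die_area_mm2))


def poisson_yield(die_area_mm2: float, defects_per_cm2: float = 0.1) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    return math.exp(-(die_area_mm2 / 100.0) * defects_per_cm2)


def good_dies_per_wafer(die_area_mm2: float, defects_per_cm2: float = 0.1) -> float:
    return gross_dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_cm2)


monolithic = good_dies_per_wafer(20 * 20)          # one 400mm² die per product
small = good_dies_per_wafer(10 * 10)               # 100mm² die, no interconnect overhead
small_plus_io = good_dies_per_wafer(10 * 10 * 1.1)  # 10% larger for interconnects

print(f"monolithic products per wafer:       {monolithic:.0f}")
print(f"4-chiplet products per wafer:        {small / 4:.0f}")
print(f"4-chiplet products, 10% larger dies: {small_plus_io / 4:.0f}")
```

Dividing the small-die counts by four gives the number of complete products per wafer, which is the fairer comparison against the monolithic die.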

Another major benefit of chiplets is that smaller chips also have much better binning since with one large chip, it’s much less likely that all its parts will bin particularly well and instead the performance might need to be pinned back to the slowest cores. With multiple small chips, individual chips that are functional but either perform a step worse or a step better than average can then be grouped together and sold as a cost efficient or premium product as appropriate. A product is only as good as its worst part, and chiplets allow a company to package together parts of similar quality, using all of them to their full potential.
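The "only as good as its worst part" idea can be illustrated with a toy example. The clock speeds below are entirely made up, and real binning involves many more parameters (voltage, leakage, per-core behaviour), so treat this as a sketch of the grouping principle only.

```python
def product_speed(chiplets: list[float]) -> float:
    """A multi-chiplet product is limited by its slowest chiplet."""
    return min(chiplets)


def pair_up(speeds: list[float]) -> list[list[float]]:
    """Group chiplets into two-chiplet products in the order given."""
    return [speeds[i:i + 2] for i in range(0, len(speeds), 2)]


# Hypothetical maximum stable clocks (GHz) for eight chiplets, in arrival order.
measured = [4.6, 5.0, 4.7, 5.1, 4.5, 4.9, 4.8, 5.0]

unsorted_products = [product_speed(p) for p in pair_up(measured)]
binned_products = [product_speed(p) for p in pair_up(sorted(measured, reverse=True))]

print("paired in arrival order:", unsorted_products)
print("paired after binning:   ", binned_products)
```

Pairing chiplets of similar quality raises the speed of the best product and wastes less of each fast chiplet's potential, while the slowest pair can still be sold as a cheaper part.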

So while there are definite benefits of cutting up a large chip into many smaller chips, this has to be balanced against the additional costs - the extra area taken up by the interconnects and the associated performance penalties and packaging costs. An interesting case study for this dilemma is the Zen x86 chiplet - the x86 cores on the Zen 3 chiplet take up about 33% of the area, the L2 and L3 caches about 52%, with the rest being I/O and other functions. If we treat the x86 cores as pure logic and the other functions as pure I/O then a simple shrink to TSMC’s 5nm node would only reduce the size by about 31%. Given the large increase in wafer costs this would make the 5nm version much more expensive to produce. Separating the x86 cores from the cache and producing the cache on a cheaper node would be the obvious choice from a manufacturing perspective but a 2D interconnect would be too slow due to the latency and bandwidth requirements of the L3 cache. Even a 2.5D interconnect would be a slow way to access the L3 cache and also take up a lot of space and consume a fair amount of power. A 3D interconnect would be fast enough for the L3 cache at least but 3D stacking has not yet been shown to work with high performance logic. Maybe this will become a viable option by the time of Zen 5, and TSMC’s SoIC-CoW method for 3nm is in fact due at about the same time as Zen 5, but this is just my own speculation.
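The shrink estimate for the Zen 3 chiplet can be reproduced with a weighted sum. The area split comes from the paragraph above, but the 7nm to 5nm density gains (roughly 1.8x for logic, 1.35x for SRAM and 1.2x for analog/I/O) are figures I am assuming for illustration, so treat them as placeholders. The wafer cost ratio uses the "about 80%" increase mentioned earlier, and yield is ignored.

```python
# Area split of the Zen 3 chiplet as quoted above ("the rest" = 15%).
AREA_SHARE = {"logic": 0.33, "sram": 0.52, "io": 0.15}

# ASSUMPTION (not from the article): approximate 7nm -> 5nm density gains.
DENSITY_GAIN_5NM = {"logic": 1.8, "sram": 1.35, "io": 1.2}

# Wafer cost on 5nm relative to 7nm, using the ~80% increase quoted earlier.
WAFER_COST_RATIO_5NM = 1.8


def shrunk_area_ratio(area_share: dict[str, float], density_gain: dict[str, float]) -> float:
    """New die area relative to the old one, summing each block's scaled share."""
    return sum(share / density_gain[block] for block, share in area_share.items())


ratio = shrunk_area_ratio(AREA_SHARE, DENSITY_GAIN_5NM)
relative_die_cost = ratio * WAFER_COST_RATIO_5NM

print(f"size reduction: {1 - ratio:.0%}")
print(f"relative silicon cost per die: {relative_die_cost:.2f}x")
```

With these assumed inputs the shrink works out to about 31%, in line with the figure above, and the silicon cost per die rises by roughly a quarter before yield is considered.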

Why haven’t other companies gone with chiplets?

So far in this article, I’ve assumed that companies would try to avoid passing higher manufacturing costs onto customers, so why has AMD largely gone it alone with chiplets in the PC arena? I think there are several reasons for this but the main one is likely economies of scale. For both x86 processors and GPUs, AMD has a much smaller market share than their primary competitors, or at least they did when they started the chiplet approach. Having a smaller market share means that AMD is more sensitive to R&D costs than their competition and as noted in the previous section, chiplets can save on R&D costs as well as production costs.

When Zen was still new, AMD was struggling to make profits at all and would have wanted their R&D to be as efficient as possible and with newer nodes expected to have rapidly increasing design costs it was increasingly important to use more efficient design methodologies. Even bringing a minor variation of an existing chip to market can cost tens of millions of dollars so having fewer chips in total would save even more. I would also expect the chiplet approach to have lower R&D costs than the MCM approach they went with for the first generation of Zen products as they only needed to design one small chip on a leading edge node - the x86 chiplet itself. This ignores APUs but they are a special case that will be discussed later.

Because Intel and Nvidia have higher volumes they are less sensitive to R&D costs and so the crossover point at which it makes economic sense for them to switch over to chiplets is probably later than AMD’s. For the PC market, Intel seems set to start switching over to chiplet style designs with Meteor Lake in 2023, though they tend to refer to this as “tiles” rather than “chiplets”. While Nvidia has shown interest in chiplets, at present it’s not clear when they’ll first start using them. It probably didn’t help that Nvidia switched back and forth between TSMC and Samsung but turning GPUs into chiplets is also quite a challenge, which will be covered in more depth later.

It’s also possible that AMD overestimated the benefits of chiplets - according to a 2021 presentation, AMD said that based on predictions during planning, they had expected that a 16 core monolithic Ryzen would be about double the cost of a 16 core chiplet Ryzen (also see this video that covers the same data). With a defect density of 0.1 per cm², which would be reasonable for a mature process node, the chiplet version would cost $35 and if we guess that a monolithic 16 core desktop Zen 2 would be 250mm² then it would cost about $54, which is only 58% more. But if the defect density is 0.3 per cm² then the chiplet version costs about $39 and the monolithic version about $85. This is much closer to the relative numbers that AMD showed. In short, when they were doing this research it seems that AMD underestimated the yields on TSMC’s 7nm processes - in fact, TSMC hit a defect density of 0.09 per cm² early in the production ramp of their first 7nm node.

Another way to view this is that chiplets, being smaller, are less sensitive to the defect density and using them reduces production costs in all scenarios but particularly helps in scenarios where yields are poor. Businesses generally try to reduce risk and uncertainty in their plans and AMD would have been even more cautious as they were in a weak financial state at the time. If they’d gone with a monolithic approach and the defect density was what they expected, or worse, then that would have severely handicapped their ability to compete with Intel. So you could say that by going the chiplet route, AMD hedged against the possibility of poor 7nm yields. Had things gone worse, if TSMC screwed up their 7nm process and Intel had aced their equivalent process, then going with chiplets could have made the difference between AMD going bankrupt or not.
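The lower sensitivity of small dies to defect density can be seen with a simple Poisson yield model. This is a rough sketch under my own assumptions: the 250mm² monolithic size is the guess used above, the 80mm² chiplet size is a hypothetical round number, and the I/O die, packaging and wafer-edge effects are all left out, so the output is not a reconstruction of the dollar figures quoted earlier.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    return math.exp(-(die_area_mm2 / 100.0) * defects_per_cm2)


def silicon_per_good_die(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Wafer area (mm²) consumed per working die, counting the failed ones."""
    return die_area_mm2 / poisson_yield(die_area_mm2, defects_per_cm2)


def cost_growth(die_area_mm2: float, d_low: float, d_high: float) -> float:
    """How much the silicon cost per good die grows when defect density rises."""
    return (silicon_per_good_die(die_area_mm2, d_high)
            / silicon_per_good_die(die_area_mm2, d_low))


MONOLITHIC_MM2 = 250.0  # guess used in the text above
CHIPLET_MM2 = 80.0      # ASSUMPTION: hypothetical size for one x86 chiplet

mono_growth = cost_growth(MONOLITHIC_MM2, 0.1, 0.3)
chiplet_growth = cost_growth(CHIPLET_MM2, 0.1, 0.3)

print(f"monolithic cost growth from 0.1 to 0.3 defects/cm²: {mono_growth:.2f}x")
print(f"chiplet cost growth from 0.1 to 0.3 defects/cm²:    {chiplet_growth:.2f}x")
```

In this model the penalty for a worse process grows exponentially with die area, which is why the large die suffers far more than the small one when yields disappoint.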

What about GPUs?

AMD are expected to release their first GPU chiplets around the time of their third generation of x86 chiplets - what took them so long? To help explain this it’s worth delving a little deeper into what makes rendering graphics for a game different to rendering graphics for a movie and in turn why technologies like SLI struggle to improve performance.

In games, most of the VRAM is used for textures and vertex data that is shared across multiple frames and when multiple discrete GPUs are used that requires each to have a local copy of that data for high speed access. This is why VRAM capacity does not stack with SLI. The same applies to rendering movies except they would likely render 100s of different frames at once which would reduce the scope for sharing data across frames anyway. A movie can just accept the higher costs but for consumer graphics this is wasteful. In short, simply trying to bolt two separate GPUs onto the same card would not make for a cost effective solution - if multiple GPU chiplets are used then all must have roughly equal access to all the VRAM.

Although graphics processing is highly parallel there is still a need for synchronization within a single frame and this is to the extent that RDNA GPUs have a 64KByte “Global Data Share” unit explicitly designed to manage this. On CDNA it’s just 4KBytes, implying that it is less critical to performance outside of games. Efficient synchronization requires the latency to be as low as possible which is why SLI tends to side-step the issue by having different GPUs render different frames. This can lead to micro-stuttering in games but that doesn’t impact rendering for movies as they aren’t displayed in real time.

So a chiplet implementation of a GPU needs to solve both these problems - giving all GPU chiplets equal access to all the VRAM and efficient synchronization between all the processing units. As noted in the section on interconnect topologies, it is hard to provide equal access to memory with a homogeneous MCM design, making it much more expensive and power hungry and this helps explain why Intel’s prototype with 4 GPUs in one package suffered from high power consumption or poor performance. Apple’s M1 Ultra and AMD’s CDNA 2 accelerators have two GPUs in one package and while the former has the explicit goal of being invisible to programmers with an expensive high bandwidth and low latency interconnect, AMD’s card has a much lower bandwidth connection between the GPUs than between each GPU and its local HBM stacks, effectively making this an optimization problem for the programmers.

For GPUs, how well would a chiplet solution similar to Zen 2 or Zen 3 work? It certainly would give each GPU chiplet equal access to VRAM and the bandwidth requirements per interconnect would be proportional to the demands of each individual GPU chiplet instead of the whole system as with a homogeneous MCM. High bandwidth interconnects can be power hungry if used poorly and also add to manufacturing costs with increased die area so the fewer needed and the lower the bandwidth required the better. AMD’s Infinity Cache solution would also be effective in this regard, reducing both total system bandwidth requirements and local bandwidth requirements. AMD’s Instinct MI250X card has a 400GByte/s 2D interconnect between the two GPUs using a 128-bit Infinity Fabric 3 interface, which would probably be more than sufficient for a GPU chiplet that’s similar to Navi 21 in terms of compute performance and cache design. Putting it another way, the cost of the interconnects would be about 4x that of Zen processors. Another downside is that it would require a lot of cache on a leading process node, making it less cost efficient.

While I can imagine a graphics card with a central I/O chiplet connected to multiple GPU/cache chiplets being reasonably effective for VRAM bandwidth, I don’t know how well it would scale on tasks with a lot of synchronization. That the RDNA processors have a large Global Data Share unit suggests that synchronization is important enough to games to require dedicated hardware support. In a chiplet solution like the one above, perhaps the best place to locate such a thing would be on the I/O chiplet but would the connections to the GPU chiplets be low latency enough? Unfortunately AMD doesn't provide any documentation on the Global Data Share and Nvidia is far more secretive about the inner workings of their GPUs, so there is a distinct lack of public information on such things.

To reduce latency as much as possible and to split the cache from the GPUs, the ideal approach would be to integrate the cache and any centralized management onto the I/O chiplet and then 3D stack the GPU chiplets onto it directly. A 512MByte cache on 6nm would probably have enough space to stack four 80-100mm² GPU chiplets on top of it, though smaller versions of the I/O chiplet would likely be required for lower end models. At the time of writing it’s unclear when such a solution would actually become practical and cost effective to use in consumer graphics. If AMD do in fact go down the 3D stacking route with Navi 31 or Navi 32 then it would suggest that TSMC’s 3D stacking technology is more advanced than previously thought.

In short, it’s a tricky problem and a monolithic design makes solving all these technical issues a lot easier. The economics of manufacturing GPU chips are also a bit different - general purpose processors typically have a relatively small number of large CPU cores, which means that a lot is lost by disabling just one core, while the equivalent units on GPUs are both smaller and more numerous, so the relative cost of disabling one is less. It’s also accepted practice that most GPUs ship with some parts disabled. This makes the manufacturing downsides of large GPUs lower than those of large chips in general.

What about APUs?

The APUs in PCs and laptops don’t have the heavy bandwidth requirements or synchronization overheads that GPUs have, meaning that achieving raw performance is less of a challenge, so why aren’t chiplets used more? The challenges are simply different - most APUs are targeting laptop and mobile products, where power efficiency is at a premium and the connections between chiplets using conventional technology would consume significantly more power compared to the equivalent connections within a monolithic chip. When entire processors can be limited to just 15W on laptops, a few extra watts overhead for a chiplet alternative might be too much.

APUs are also generally quite small so the manufacturing benefits of splitting up the die are reduced and the cost of packaging the chiplets themselves becomes more significant. As APUs are targeted primarily at laptops and are integrated into laptops by OEMs, who tend to be very sensitive to costs, the additional packaging costs of chiplets would have to be minimized to make them competitive. It’s also easier to absorb the additional R&D costs of designing monolithic APUs since the market for laptops is much larger than for desktops.

While APUs for desktops and laptops that are basically desktop replacements should be less of a challenge, cost is always an issue, particularly for the lower-end of the market. In AMD’s case, with Zen 2 and Zen 3, the I/O chiplet was not very power efficient and though that doesn’t matter so much when all cores are running at full load, it does make cooling more expensive and more of a limitation. In addition, if the minimum power consumption is high then any battery life tests are going to look bad. While AMD does seem intent on reducing the power consumption of their I/O chiplet with Zen 4 and they will have some chiplet based mobile processors, AMD doesn’t consider them to be APUs as the graphics performance is minimal.

At the moment, Intel’s Meteor Lake might be the first chiplet style mainstream APU - one chiplet for CPU, one chiplet for GPU and one chiplet for external I/O. It will be interesting to see how well it performs in practice and also how much it costs - the 2.5D packaging might make it too expensive for low-end designs.

I don’t know if any of AMD’s future APUs can support V-cache but it would make sense from a performance perspective, as APUs are often far more cache constrained than desktop processors. Adding V-cache would obviously have additional manufacturing and packaging costs, so it would be for premium products only. The V-cache would also increase maximum power consumption but should increase performance by a greater degree - more or better cache is generally the most power efficient way to improve performance.

Longer term, advanced 3D interconnects could also make chiplets for APUs about as power efficient as monolithic designs. By the 3nm generation, a combined CPU and GPU chiplet about 80-100mm² in size 3D stacked on top of a 6nm I/O chiplet with a large L3 cache would have great performance by today’s standards and also be fairly economical as well as more compact than the monolithic equivalent.

There are already low-end APUs and I expect those to stay monolithic and use cheaper process nodes. However, as the packaging costs come down, the manufacturing capacity increases and the technology improves over time, chiplet APUs should become mainstream, though first in the most premium laptops and compact desktops. Being able to share many of the same chiplets across laptop, desktop and potentially server processors would have many advantages.

Summary

The way I would define chiplets is quite specific and essentially boils down to this - only compute logic is becoming more economical with the latest generation of process nodes, so there can be significant value in splitting up monolithic chips with this in mind. Unlike the reticle limit problem this affects almost everyone and means that there are strong economic incentives for chip designers to split chips into compute logic and the rest, using the latest nodes for compute logic and older nodes for the rest. In general that tends to result in smaller chips which are also more economical to manufacture, which is why we have seen conventional MCMs in limited cases in the past. The net effect is that the most efficient way to manufacture chips on advanced nodes is as heterogeneous MCMs, split into compute logic on advanced nodes and the rest on less advanced nodes, i.e. chiplets.
